Fun With Grid Engine Topology Masks -or- How to Bind Compute Jobs to Different Amounts of Cores Depending on Where the Jobs Run (2013-01-09)

A compute cluster or grid often contains a mix of hardware. Some compute nodes offer processors with strong compute capabilities while others are older and slower. This becomes an issue when parallel compute jobs are submitted to a job/cluster scheduler: when a job runs on slower hardware it should use more compute cores than on faster hardware. But the submission parameters (like the number of cores used for core binding) are usually fixed at submission time. An obvious solution is to restrict the job to a specific cluster partition with similar hardware (in Grid Engine, for example, a queue domain requested with -q queue@@hostgroup). The drawback of this approach is that the cluster is not fully utilized while jobs wait for a fast machine.

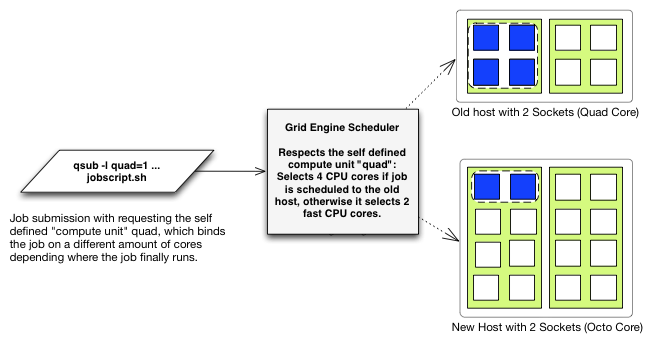

An alternative is to exploit the new Grid Engine topology masks introduced in Univa Grid Engine 8.1.3. With the core binding submission parameter (qsub -binding) a job requests a fixed number of cores, whereas with a topology mask the core selection is tied to the resource, i.e. to the execution host the scheduler selects. This way the Grid Engine scheduler can translate a virtual entity into a machine-specific set of cores. That's a bit abstract, so an example should demonstrate it:

We assume that we have two types of machines in our cluster: old hosts with two quad-core processors and new hosts with two octo-core processors. The workload creates lots of threads, or can create as many threads as there are processors available (during job execution the bound processors can be read from the $SGE_BINDING environment variable). What we can do now is define a virtual compute entity which expresses a certain amount of compute power. Let's call it a quad here. Since the old compute cores are slower than the new ones, we can define that on the old machines a quad is all four cores of a processor, while on the new hosts a quad is equivalent to two cores (or one core if you like).
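Since the job only needs to know how many processors it was given, a multithreaded program can size its thread pool from $SGE_BINDING. The following Python sketch assumes that the variable holds whitespace-separated processor IDs (for example "0 1 2 3"). The function name bound_thread_count is made up for illustration, and the fallback to os.sched_getaffinity (Linux only) or os.cpu_count() is an added choice, not Grid Engine behavior.

```python
import os


def bound_thread_count(environ=None):
    """Return how many worker threads the job should start.

    Assumption: $SGE_BINDING holds the bound processor IDs separated by
    whitespace, e.g. "0 1 2 3". If it is unset or empty, fall back to the
    process CPU affinity (Linux only) or to os.cpu_count().
    """
    if environ is None:
        environ = os.environ
    binding = environ.get("SGE_BINDING", "").split()
    if binding:
        return len(binding)
    if hasattr(os, "sched_getaffinity"):
        # the affinity mask already reflects any binding applied to the process
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1
```

Inside the job, something like ThreadPoolExecutor(max_workers=bound_thread_count()) then starts four threads on an old host and two on a new host for the same one-quad request.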

All you have to do is define the new compute entity quad in the Grid Engine complex configuration as a per-host consumable of type RSMAP (resource map):

qconf -mc

quad qd RSMAP <= YES HOST 0 0
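To make the fields of that complex line explicit, here is a small Python sketch that splits it into the columns of the complex configuration (name, shortcut, type, relop, requestable, consumable, default, urgency). The parser and its names are illustrative only; check complex(5) for your release, since later versions may append further columns, which this sketch simply ignores.

```python
# Column order of a complex definition line as edited with qconf -mc.
COMPLEX_COLUMNS = ("name", "shortcut", "type", "relop",
                   "requestable", "consumable", "default", "urgency")


def parse_complex_line(line):
    """Map one complex definition line to {column name: value}.

    Any columns beyond the eight listed above are ignored by zip().
    """
    fields = line.split()
    if len(fields) < len(COMPLEX_COLUMNS):
        raise ValueError(f"expected {len(COMPLEX_COLUMNS)} columns, got {len(fields)}")
    return dict(zip(COMPLEX_COLUMNS, fields))


quad = parse_complex_line("quad qd RSMAP <= YES HOST 0 0")
for column, value in quad.items():
    print(f"{column:12} {value}")
```

The two values that matter here are type RSMAP, which lets each unit of the resource carry its own ID and topology mask, and consumable HOST, which makes the request count once per host rather than once per slot.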

Now we need to assign the quads to the hosts. This is done in the host configuration. On an old host a quad represents 4 cores, hence 2 quads are available per host.

qconf -me oldhost

complex_values quad=2(quad1:SCCCCScccc quad2:SccccSCCCC)

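Now we have two quad resources available: quad1, which is bound to the 4 cores on the first socket, and quad2, which is bound to the 4 cores on the second socket. In the topology mask, S starts a socket, an uppercase C marks a core that belongs to the resource, and a lowercase c marks a core that does not.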
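Reading a mask is mechanical, so a few lines of Python can show which cores a resource ID stands for. This sketch assumes, as the example suggests, that S opens a socket, uppercase C is a core of the resource and lowercase c is not; thread letters are not handled. The function name mask_to_cores is hypothetical.

```python
def mask_to_cores(mask):
    """Translate a topology mask such as "SCCCCScccc" into the
    (socket, core) pairs it selects, both counted from 0."""
    selected = []
    socket, core = -1, 0
    for ch in mask:
        if ch == "S":
            socket, core = socket + 1, 0   # a new socket starts
        elif ch in "Cc":
            if socket < 0:
                raise ValueError("core listed before any socket")
            if ch == "C":
                selected.append((socket, core))
            core += 1
        else:
            # threads (T/t) and anything else are outside this sketch
            raise ValueError(f"unexpected character {ch!r} in mask")
    return selected


print(mask_to_cores("SCCCCScccc"))  # quad1 on oldhost
print(mask_to_cores("SccccSCCCC"))  # quad2 on oldhost
```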

The same needs to be done on the new hosts, but there a quad is just two CPU cores:

qconf -me newhost

complex_values quad=8(quad1:SCCccccccScccccccc quad2:SccCCccccScccccccc \

quad3:SccccCCccScccccccc quad4:SccccccCCScccccccc \

quad5:SccccccccSCCcccccc quad6:SccccccccSccCCcccc \

quad7:SccccccccSccccCCcc quad8:SccccccccSccccccCC)
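Writing these masks by hand becomes error-prone on larger machines. The hypothetical helper quad_masks below (not part of Grid Engine) generates the complex_values entry from the socket and core counts, assuming each socket is split into consecutive groups of equal size; for the two host types above it reproduces the strings shown, just on a single line.

```python
def quad_masks(sockets, cores_per_socket, cores_per_quad, name="quad"):
    """Build a complex_values entry that splits every socket into
    consecutive groups of cores_per_quad cores, one resource ID per group."""
    if cores_per_socket % cores_per_quad:
        raise ValueError("cores_per_socket must be a multiple of cores_per_quad")
    ids = []
    for socket in range(sockets):
        for start in range(0, cores_per_socket, cores_per_quad):
            mask = ""
            for s in range(sockets):
                cores = ["c"] * cores_per_socket
                if s == socket:
                    # uppercase C marks the cores that belong to this ID
                    cores[start:start + cores_per_quad] = ["C"] * cores_per_quad
                mask += "S" + "".join(cores)
            ids.append(f"{name}{len(ids) + 1}:{mask}")
    return f"{name}={len(ids)}({' '.join(ids)})"


print(quad_masks(2, 4, 4))  # oldhost
print(quad_masks(2, 8, 2))  # newhost
```

The printed line can be pasted into the complex_values field opened by qconf -me.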

This looks like a bit of work for the administrator, but afterwards it is very simple to use. The user just requests the number of quads they want for the job, and the core selection and core binding are done automatically. The scheduler always selects the first free quad in the order of the complex_values definition. The following job submission demonstrates it:

qsub -l quad=1 -pe mytestpe 4 -b y sleep 123
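The first-free rule can be modeled in a few lines. The Python sketch below is not the scheduler's implementation, only a model of the behavior described: free resource IDs are taken in complex_values order, and their masks determine the cores to bind. It uses the oldhost masks from above; all function names are hypothetical.

```python
# oldhost resource IDs and masks, in complex_values order (dicts keep insertion order)
OLDHOST = {"quad1": "SCCCCScccc", "quad2": "SccccSCCCC"}


def selected_cores(mask):
    """(socket, core) pairs marked with an uppercase C in a topology mask."""
    pairs, socket, core = [], -1, 0
    for ch in mask:
        if ch == "S":
            socket, core = socket + 1, 0
        elif ch in "Cc":
            if ch == "C":
                pairs.append((socket, core))
            core += 1
    return pairs


def select_quads(host_masks, in_use, requested):
    """First fit: the first `requested` free IDs in definition order, or None."""
    free = [rid for rid in host_masks if rid not in in_use]
    if len(free) < requested:
        return None
    return free[:requested]


def binding_for(host_masks, ids):
    """All cores covered by the granted resource IDs."""
    return sorted(core for rid in ids for core in selected_cores(host_masks[rid]))


job1 = select_quads(OLDHOST, in_use=set(), requested=1)
job2 = select_quads(OLDHOST, in_use=set(job1), requested=1)
job3 = select_quads(OLDHOST, in_use=set(job1 + job2), requested=1)
print(job1, binding_for(OLDHOST, job1))
print(job2, binding_for(OLDHOST, job2))
print(job3)  # None: both quads are taken, so this job cannot use this host
```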

In order to force all jobs to use this compute entity quad, a JSV script could be written which adds the quad request to every job while disallowing any existing core binding requests.
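A real JSV talks to Grid Engine through the JSV protocol, usually via the helper libraries shipped with Grid Engine; the Python sketch below leaves that plumbing out and shows only the rewrite logic on a plain dict of job parameters. The l_hard key and the binding prefix are modeled on JSV parameter names, but the dict interface and the function enforce_quad_request are hypothetical. This sketch drops binding requests; a JSV could just as well reject such jobs.

```python
def enforce_quad_request(params, default_quads=1):
    """Return a copy of the job parameters with every binding* entry
    removed and a quad request added to the hard resource list,
    unless the job already requests quad (or its shortcut qd)."""
    result = {k: v for k, v in params.items() if not k.startswith("binding")}
    # l_hard is assumed to be a comma-separated list of name=value requests
    requests = [r for r in result.get("l_hard", "").split(",") if r]
    names = {r.split("=", 1)[0] for r in requests}
    if not names & {"quad", "qd"}:
        requests.append(f"quad={default_quads}")
    result["l_hard"] = ",".join(requests)
    return result


job = {"l_hard": "h_vmem=1G", "binding_strategy": "linear_automatic", "binding_amount": "4"}
print(enforce_quad_request(job))
```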

When the job runs on a new host it is bound to two cores, while when Grid Engine schedules it to an old host it runs on four cores. Of course you can also request as many quads as you like. NB: When requesting more than 2 quads per job, the job can't be scheduled to an old host; it will run only on new hosts. The quads are a per-host request, meaning that on each host the job runs on, the requested number of quads is granted (independent of the requested and granted number of slots on that host).
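The consequences can be summarized arithmetically. With 2 quads of 4 cores on an old host and 8 quads of 2 cores on a new host, a request for q quads binds 4q cores on an old host (possible only for q <= 2) and 2q cores on a new host. The Python sketch assumes otherwise idle hosts; HOST_TYPES and placement are illustrative names.

```python
# host type: (quads defined per host, cores per quad), from the examples above
HOST_TYPES = {
    "oldhost": (2, 4),
    "newhost": (8, 2),
}


def placement(quads_requested):
    """Per host type: cores the job is bound to on that host,
    or None if the host cannot grant that many quads."""
    result = {}
    for host, (quads, cores_per_quad) in HOST_TYPES.items():
        if quads_requested <= quads:
            result[host] = quads_requested * cores_per_quad
        else:
            result[host] = None
    return result


for q in (1, 2, 3):
    print(q, placement(q))
```

Because quad is consumed per host, a parallel job spanning several hosts gets this binding on each of them.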

This feature is unique to Univa Grid Engine and was introduced in UGE 8.1.3.