Add Any Resource to Gridware Cluster Scheduler / Open Cluster Scheduler (fka Sun Grid Engine) (2025-12-22)

GCS/OCS provides a flexible resource management system that has been refined over many years. Integrating new resources—whether quantum computers, tape libraries, cloud instances, hardware emulators, or something else entirely—follows a consistent pattern. Here's how it works.

Step 1: Define the Resource Type

Resources are defined in the complex configuration. To see what's already configured:

qconf -sc

To add new resources, you have two options:

Interactive editing:

qconf -mc

File-based editing:

qconf -sc > myresources

# edit myresources

qconf -Mc ./myresources

Each resource definition requires several attributes:

- name and shortcut – how you refer to it

- type –

MEMORY,INT,DOUBLE,BOOL,RSMAP, etc. - requestable – whether jobs can request it via

qsub -l - default – typically

0; note that a non-zero default gets implicitly added to every job request - consumable – whether the scheduler should decrement the available amount when scheduling jobs

Step 2: Initialize Resource Values

This step is optional but necessary for limited, consumable resources like GPUs or licenses. The key decision is where to initialize (within the complex_values field):

Global level – resource is available cluster-wide, independent of where the job runs:

qconf -me global

Host level – resource is attached to a specific execution host:

qconf -me <hostname>

Queue instance level – finer granularity than host; useful when different queues on the same host have different resource allocations:

qconf -mq <queuename>

In each case, add your resource to the complex_values field. For queue-level configuration, you can either set a value for all instances or use bracket notation to target specific queue instances on specific hosts.

Check the resource availability with qstat -F resourcename

Step 3: Request the Resource

Jobs request resources using the -l option:

qsub -l qpu=1 myjob.sh

To inspect what was requested and granted:

qstat -j <jobid>

The job environment receives granted resources as variables with the SGE_HGR_ (hard) or SGE_SGR_ (soft) prefix. Your job script can use these to configure itself appropriately.

Further Integration: Prolog and Epilog Scripts

For many resources, there's additional work required to actually access or release them. This is handled through prolog and epilog scripts configured in the queue configuration.

Prolog scripts typically:

- Read

SGE_HGR_*environment variables (which display the (hard) granted resources) - Interact with the resource (acquire locks, initialize hardware, provision cloud instances)

- Write additional environment variables to the job's environment file

Epilog scripts typically:

- Release or de-register the resource

- Collect consumption metrics (power usage, costs, runtime statistics)

- Update the resource usage file so consumption data appears in

qacct -joutput

This last point integrates directly with the extensible JSONL accounting system, making resource consumption visible and auditable.

The Starter Method

Another frequently used hook is the starter_method. Configure it in the queue with a path to a script or binary. Instead of executing the job directly, the execution daemon calls this starter script with the job script and its arguments as parameters.

From there, it's up to your starter script to:

- Prepare the environment

- Perform any setup required for the resource

- Launch the actual job as a child process

Since the starter script inherits all job-related environment variables (including granted resources) and receives the job command line as arguments, it has everything needed to wrap job execution transparently.

A common use case: running jobs inside containers without requiring users to modify their job scripts. The starter method can invoke Apptainer, Enroot, or any other container runtime, passing the job script as the container's entry point. Users submit normal jobs; the infrastructure handles containerization.

Load Sensors

Load sensors provide dynamic information about resource states—temperatures, shared storage capacity, network utilization, or any other metric that changes over time.

They're scripts or binaries that the execution daemon triggers at configurable intervals. Each invocation reports the current value of one or more resources. These values can be provided whether or not the resource is initialized with a static value at the global, host, or queue instance level.

Load sensor data is useful for:

- Users checking cluster status before submitting jobs

- Administrators monitoring infrastructure health

- The scheduler detecting overloaded resources and automatically putting them into alarm state

With these interfaces—complex configuration, prolog/epilog scripts, the starter method, and load sensors—you can integrate any resource, independent of what that resource actually is.

Gridware Cluster Scheduler / Open Cluster Scheduler 9.0.10 Available (2025-12-15)

Gridware Cluster Scheduler 9.0.10 based on Open Cluster Scheduler 9.0.10 (fka. Grid Engine) is now available. This is a patch release with fixes accumulated over the last month.

If you want to give Open Cluster Scheduler a try in a VM or container, the one-line installer has been updated as well. If you want to test a multi-node setup within local containers, the repo now contains an example based on Docker Compose.

git clone https://github.com/hpc-gridware/quickinstall

cd quickinstall/containers/openSUSE/15.6

docker compose up -d

Wait a short while until installation is finished (1–2 minutes).

docker exec -it ocs-master bash

ocs-master:/ # su - gridware

gridware@ocs-master:~> qhost

HOSTNAME ARCH NCPU NSOC NCOR NTHR LOAD MEMTOT MEMUSE SWAPTO SWAPUS

----------------------------------------------------------------------------------------------

global - - - - - - - - - -

ocs-master lx-arm64 14 1 14 14 0.33 11.7G 1.4G 12.7G 0.0

ocs-worker1 lx-arm64 14 1 14 14 0.34 11.7G 1.4G 12.7G 0.0

ocs-worker2 lx-arm64 14 1 14 14 0.34 11.7G 1.4G 12.7G 0.0

gridware@ocs-master:~> qsub -b y sleep 123

Your job 1 ("sleep") has been submitted

gridware@ocs-master:~> qstat -f

queuename qtype resv/used/tot. load_avg arch states

---------------------------------------------------------------------------------

all.q@ocs-master BIP 0/1/14 0.32 lx-arm64

1 0.55500 sleep gridware r 2025-12-15 10:14:43 1

---------------------------------------------------------------------------------

all.q@ocs-worker1 BIP 0/0/10 0.32 lx-arm64

---------------------------------------------------------------------------------

all.q@ocs-worker2 BIP 0/0/10 0.32 lx-arm64

gridware@ocs-master:~> qstat -j 1

...

To munge or not to munge (2025-11-6)

Gridware Cluster Scheduler supports munge authentication in daily builds since a while, bringing enhanced security to containerized workloads. This widely-adopted service runs as a separate daemon, verifying real UID/GID for cluster tools like qsub and qrsh across multi-node environments.

The security benefits are particularly valuable when allowing user namespaces for containers, ensuring safe operation without authentication compromises. Best of all, installation couldn't be simpler—just add the -munge flag to your installer command:

./inst_sge -munge -m ...

For anyone running containerized HPC workloads, munge support is highly recommended and easy enough that the answer to "to munge or not to munge" is clearly: munge.

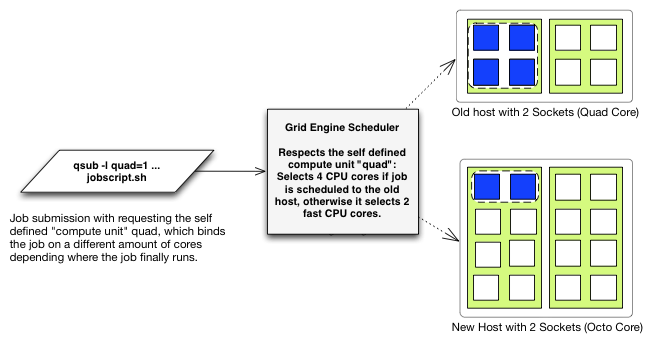

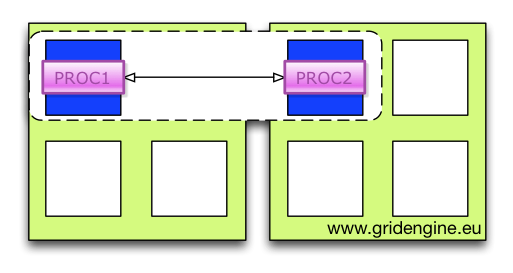

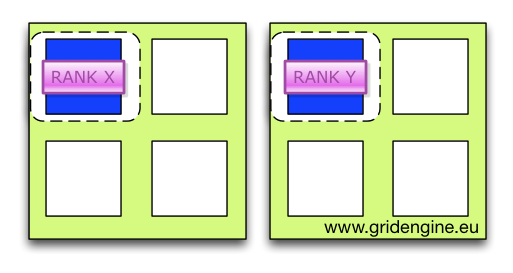

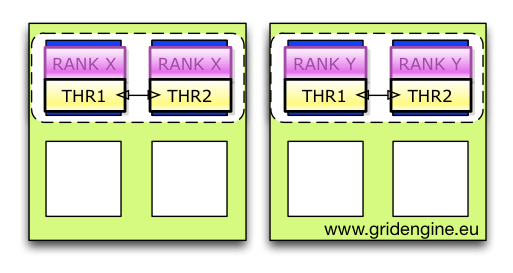

Multi-Node Concepts: From Grid Engine Legacy to the AI Age (2025-09-29)

Grid Engine introduced the parallel job concept to the scheduler domain decades ago, laying the foundational groundwork. In today's AI age, multi-node computations are the essential building blocks that allow us to train, finetune, and run inference at scale.

But the complexity hasn't disappeared—it's just shifted. When you need to understand the precise internal concepts behind robust, scalable multi-node job orchestration in modern environments, please check out my latest post over at hpc-gridware.com.

We take a deep dive into the Gridware Cluster Scheduler / Open Cluster Scheduler machinery that makes distributed computing reliable:

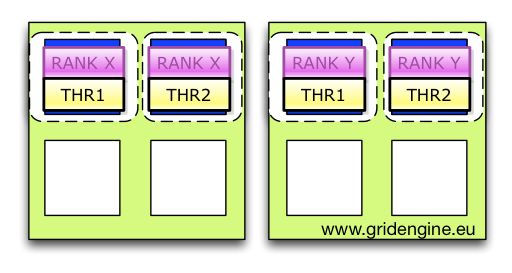

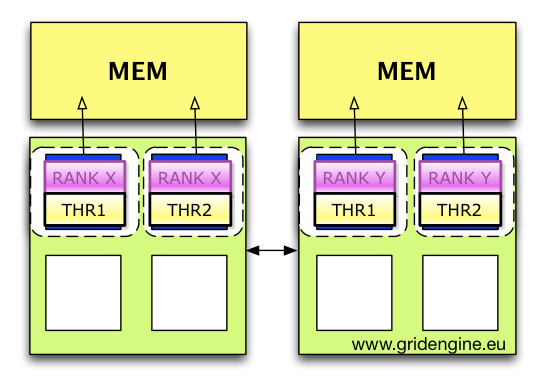

- PEs and Allocation Rules: How the Parallel Environment dictates slot distribution (e.g.,

$fill_upvs.$round_robin) and controls your resource footprint. - The Consumable Logic: A detailed look at how to define and request resources using different

consumablescopes (YES,HOST,JOB) to manage everything from memory to licenses. - Controlling the Slaves: The critical role of

qrsh -inheritandcontrol_slavesin enforcing per-node resource limits and ensuring complete job cleanup. - RSMAP for Specialized Resources: Managing non-uniform resources like GPUs, network devices, and ports with the powerful RSMAP resource type.

This is the technical knowledge required to move your multi-node jobs from basic execution to optimized, production-grade workflows.

Read the full post on multi-node job concepts: here

From Sun Grid Engine to Gridware Cluster Scheduler: Why Job Priority Configuration Still Matters (2025-07-25)

The job priority system that was refined over years continues to be one of the most sophisticated features in Open Cluster Scheduler and its fully supported companion, Gridware Cluster Scheduler. Yet it's also one of the most underutilized capabilities in many HPC environments.

After working with countless Grid Engine deployments over the years, and now helping organizations transition to Open Cluster Scheduler and Gridware Cluster Scheduler, I've noticed that while administrators often get comfortable with basic queue configuration, they rarely explore the priority configuration mechanisms that can fundamentally change how their clusters serve organizational needs.

Why This Matters Now

In today's HPC landscape, compute resources aren't just expensive—they're strategic business assets. But it's not just about hardware costs; application license costs can easily exceed hardware expenses, with software licenses often running into hundreds of thousands of dollars or more annually. The difference between an optimally configured priority system and default settings can mean the difference between meeting critical deadlines and missing them entirely—while licensed applications sit idle.

The challenge isn't technical complexity. The real challenge is aligning technical capabilities with business requirements. We've seen environments where expensive GPU clusters and costly application licenses remain underutilized while urgent jobs wait in queue, simply because the priority system wasn't configured to reflect organizational priorities and license economics.

The Evolution Continues

What makes this discussion particularly timely is that good old Sun Grid Engine has found new life as Open Cluster Scheduler, with its fully supported companion, Gridware Cluster Scheduler. This isn't just a rebranding—it's a revitalization of the entire Grid Engine ecosystem for modern computing environments.

The priority system remains at the heart of both platforms, but now it's paired with active development, modern container integration, enhanced GPU support, and enterprise backing that organizations need for critical production deployments.

Dive Deeper

The priority system combines share trees, functional policies, override mechanisms, and urgency calculations through a sophisticated weighting system that creates dynamic fairness while ensuring important projects get the resources they need.

If you're ready to optimize your cluster's priority configuration, I've put together a guide that walks through the mathematical foundations, configuration examples, and real-world implementation strategies: Understanding Job Priority Configuration in Gridware Cluster Scheduler.

Quick & Dirty Open Cluster Scheduler 9.0.5 Install Script (2025-05-04)

Update (July 21, 2025): Newer versions are now available! You can install OCS 9.0.6 or 9.0.7 for testing using:

curl -s https://raw.githubusercontent.com/hpc-gridware/quickinstall/refs/heads/main/ocs.sh | OCS_VERSION=9.0.7 shIf you want to give Open Cluster Scheduler (OCS) 9.0.5 a quick spin without following the whole doc, I've built a simple shell installer. It's for single-node (qmaster/execd) setups. Feel free to add more execds later.

Heads up:

Don't expect this script to work on every distro or minimal OS install without a hitch. You might hit a missing package, lack of man pages, or a small OS quirk. If you run into trouble, please comment in the gist. If it works, give it a like!

How to quick-try (be sure to review the script first!):

curl -s https://gist.githubusercontent.com/dgruber/c880728f4002bfd6a0d360c7f6a27de1/raw/install_ocs_905.sh | sh

or

wget -O - https://gist.githubusercontent.shcom/dgruber/c880728f4002bfd6a0d360c7f6a27de1/raw/install_ocs_905.sh | sh

Again: Please check the script before you run it.

For a serious, production install (with full details and user setup), refer to the official documentation bundled in the OCS doc packages.

Podcast 2: Gridware Cluster Scheduler & Open Cluster Scheduler 9.0.5 (2025-04-16)

I'm excited to share our latest podcast, which explores the release of Gridware Cluster Scheduler 9.0.5 — built on the new Open Cluster Scheduler 9.0.5! Once again, I turned to NotebookLM to generate a dynamic conversation based entirely on our latest release notes and blog posts. In true Grid Engine tradition, I made sure to double-check everything for accuracy, and the result is a concise, informative episode that captures all the key improvements of this major release.

If you're interested in what's new in 9.0.5, how adopting the Open Cluster Scheduler as our foundation strengthens Gridware, or what this means for current and future users, I think you'll really enjoy this episode.

As always, let us know what you think. It’s inspiring to see how AI can help us tell our story even better—while ensuring the technical details are spot-on. Stay tuned for more updates from the Grid Engine and HPC community!

Blog Post About Efficient NVIDIA GPU Management in HPC and AI Workflows with Gridware Cluster Scheduler (2025-04-13)

Over at HPC Gridware I recently published a blog post highlighting how Gridware Cluster Scheduler (formerly known as "Grid Engine") can significantly simplify GPU management and maximize efficiency in HPC and AI environments.

In the post, we cover exciting new capabilities and improvements, including intelligent scheduling to ensure your valuable GPUs never stay idle, automated GPU setup with simple one-line prolog and epilog scripts, and comprehensive per-job GPU monitoring with detailed accounting metrics. We also walk through integrated support for NVIDIA’s latest ARM-based Grace Hopper and Grace Blackwell platforms, showcasing Gridware’s flexibility for modern hybrid compute clusters with mixed compute architectures.

Additionally, The article provides hands-on examples, such as running GROMACS workloads seamlessly on the new NVIDIA architecture, and integrating NVIDIA containers effortlessly using Enroot. To further improve visibility and operational efficiency, Gridware now supports exporting key GPU metrics to Grafana.

Interested in ensuring your GPUs are always working at full capacity while keeping management complexity at bay? Check out the full blog post on HPC Gridware for all the details!

Discover Valuable HPC / AI Cluster Insights in 5 Minutes Using qtelemetry (2025-02-16)

Observability in HPC and AI clusters continues to evolve, and we’re thrilled to introduce qtelemetry, now in developer preview. This new tool provides deep insight into your Gridware Cluster Scheduler (GCS) environment in just a few minutes. Best of all, it can even work with legacy Grid Engine clusters when needed.

Introducing qtelemetry

qtelemetry streamlines HPC observability by simplifying integrations with tools like Prometheus and Grafana. Key features include:

• Effortless time series integration: Quickly connect your cluster to Prometheus and Grafana.

• Customizable sample dashboards: Keep an eye on jobs overview, hosts overview, and queue metrics.

• Outlier detection: Instantly flag non-functional hosts or jobs stuck in an error state.

• Resource monitoring: Track host loads including CPU, memory, GPU availability, and custom resources.

• sge_qmaster supervision: Monitor critical daemons (e.g., qmaster CPU/memory usage) and spooling filesystem performance.

• Containerized observability stack: Integrates smoothly with container-based deployments for flexible HPC environments.

If you’re eager to try qtelemetry, simply contact us at HPC Gridware!

Seamless Integration with Prometheus & Grafana

Setting up observability for your HPC or AI clusters has never been simpler. qtelemetry lets you feed GCS metrics directly into Prometheus for comprehensive monitoring:

Connect to Prometheus: Collect crucial metrics (host load, memory usage, GPU availability, etc.) transparently in Prometheus.

Visualize with Grafana: Our sample dashboards offer quick snapshots of job states, host performance, and queue details. Customize them to suit your environment.

Alerting & Outlier Detection: Use Grafana’s alerting capabilities to stay on top of failed jobs, overloaded hosts, or daemon performance issues.

Sample Dashboard Examples

Our developer preview comes with sample dashboards to give you immediate visibility into essential HPC metrics:

Figure: A cluster global summary showing the amount of execution hosts, sockets, cores, compute threads, and NVIDIA GPUs available in the cluster along with an overview about running jobs per node.

Figure: Cluster global jobs overview including different states and the possibility to group by waiting time to easily detect if jobs get stuck.

Figure: A cluster queue slots summary view known from qstat -f.

Figure: Monitor CPU load, memory usage, and GPU availability for all hosts.

These intuitive dashboards ensure you can quickly identify non-responsive hosts or stuck jobs, helping your HPC cluster run smoothly.

Get Started with qtelemetry

Ready to unlock valuable cluster insights in just minutes? The qtelemetry developer preview is now available. Connect your Gridware Cluster Scheduler to Prometheus, load our pre-built Grafana dashboards, and begin monitoring your environment.

For additional information or to arrange a demo, please reach out to us at HPC Gridware. We’re excited to help you gain greater visibility into your HPC and AI workloads.

Happy monitoring!

Daniel

HPC Gridware

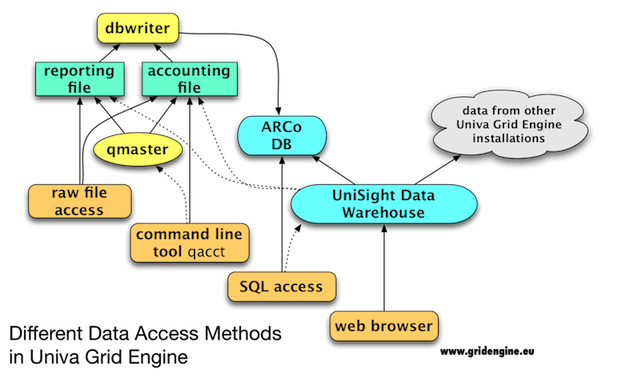

Introducing Flexible Accounting in Gridware Cluster Scheduler: Collect Arbitrary Job Metrics (2025-02-09)

Ever dreamed of capturing custom metrics for your jobs—like user-generated performance counters or application-specific usage data—directly in your accounting logs? Gridware Cluster Scheduler (and its open source companion, Open Cluster Scheduler) just made it a reality with brand-new “flexible accounting” capabilities.

Why Flexible Accounting Matters

In HPC environments, traditional accounting systems can be limiting. They typically capture CPU time, memory usage, perhaps GPU consumption—yet your workflow might demand more: e.g., analyzing model accuracy, network throughput, or domain-specific metrics. With Gridware’s flexible accounting, you can insert arbitrary fields into the system’s accounting file, just by placing a short epilog script in your queue configuration. Then, whenever you run qacct -j <job_id>, these additional metrics appear neatly alongside standard resource usage.

How It Works

In essence, the cluster scheduler calls a admin-defined epilog after each job completes. This small script (it can be in Go, Python, or any language you like) appends as many numeric "key-value" pairs as you wish to the scheduler’s accounting file. For example, you might measure data from your application’s logs (say, images processed or inference accuracy), then push those numbers right into the accounting system. The code snippet below (in Go) demonstrates how easily you can add random metrics—just replace them with values drawn from your own logic:

package main

import (

"fmt"

"github.com/hpc-gridware/go-clusterscheduler/pkg/accounting"

"golang.org/x/exp/rand"

)

func main() {

usageFilePath, err := accounting.GetUsageFilePath()

if err != nil {

fmt.Printf("Failed to get usage file path: %vn", err)

return

}

err = accounting.AppendToAccounting(usageFilePath, []accounting.Record{

{

AccountingKey: "tst_random1",

AccountingValue: rand.Intn(1000),

},

{

AccountingKey: "tst_random2",

AccountingValue: rand.Intn(1000),

},

})

if err != nil {

fmt.Printf("Failed to append to accounting: %vn", err)

}

}

Once you have defined your epilog you need to configure it in the cluster queue configuration (qconf -mq all.q).

epilog sgeadmin@/path/to/flexibleaccountingepilog

Here the sgeadmin is the installation user of Gridware / Open Cluster Scheduler as he has the right permissions to do that.

Finally accepting the new values in that particular format in the system needs to be enabled globally (qconf -mconf).

reporting_params ... usage_patterns=test:tst*

Here we allow tst prefixed values which are then stored in the internal JSONL accounting file in the "test" sections.

That’s all—no core scheduler modifications needed. Run your jobs normally, let them finish, then check out your new columns in qacct.

Unlocking More Insights

This feature is particularly powerful for HPC clusters applying advanced analytics. Need to track per-user image accuracy scores or data ingestion rates? Or capture domain-specific variables for auditing and compliance? Flexible accounting provides a simple, robust mechanism to store all that data consistently.

And remember: Open Cluster Scheduler users get these same advantages—just take care of a little manual configuration. Because this functionality is unique to Gridware and Open Cluster Scheduler, you won’t find it in other legacy Grid Engine variants.

Conclusion

Spend less time mashing logs together and more time exploring richer cluster usage data. Flexible accounting transforms ordinary HPC accounting into a full-blown, customizable metrics infrastructure. Whether you’re fine-tuning AI workflows or verifying compliance, you now have the freedom to store precisely the information you need—right where you expect to see it.

Gridware Cluster Scheduler Upgrades: Faster, Smarter, and Ready for AI Workloads (2025-01-20)

If you’re managing HPC or AI clusters with Grid Engine, scalability is probably your daily obsession. As core counts explode and workloads grow more complex, the Gridware Cluster Scheduler (GCS) just leveled up to keep pace—and here’s why it matters to you.

What’s Changed?

We’ve rebuilt core parts of GCS to tackle bottlenecks head-on. Let’s break down what’s new:

1. Self-Sustaining Data Stores

- Problem: The old monolithic data store couldn’t handle parallel requests efficiently. Think authentication delays or

qstatqueries clogging the system. - Fix: We split the data store into smaller, independent components. For example, authentication now runs in its own thread pool, fully parallelized. No more waiting for the main scheduler to free up.

- Result: Need to submit 50% more jobs per second? Done. Query job status (

qstat -j), hosts (qhost), or resources (qstat -F) 2.5x faster? Check.

2. Cascaded Thread Pools

- How it Works: Tasks are split into sub-tasks, each handled by dedicated thread pools. Think of it like assembly lines for requests—auth, job queries, node reporting—all running in parallel.

- Why It Matters: Even under heavy load, GCS now processes more requests without choking. We measured 25% faster job runtimes in tests, even with heavier submit rates.

3. No More Session Headaches

- Old Pain: Ever had a job submission finish but

qstatnot see it immediately? Traditional WLMs make you manage sessions manually. - New Fix: GCS auto-creates cross-host sessions. Submit a job, query it right after—no extra steps. Consistency without the fuss.

Why AI/ML Clusters Win Here

AI workloads aren’t just about GPUs—they demand massive parallel job submissions, rapid status checks, and resource juggling. These upgrades mean:

- Faster job throughput: Submit more training jobs without queue lag.

- Instant resource visibility:

qstat -Forqconfqueries won’t slow down your workflow. - Scalability: Handle thousands of nodes reporting status (thanks,

sge_execd!) without bottlenecking the scheduler.

What’s Next?

We’re eyeing predictive resource scheduling (think ML-driven job forecasting) and better GPU/CPU hybrid support. But today’s updates already make GCS a reliable solutions for modern clusters.

Try It Yourself

The Open Cluster Scheduler code is on GitHub, with prebuilt packages for:

- Linux:

lx-amd64,lx-arm64,lx-riscv64,lx-ppc64le,lx-s390x - BSD:

fbsd-amd64 - Solaris:

sol-amd64 - Legacy Linux:

ulx-amd64,xlx-amd64

Download Links:

For Grid Engine users, this isn’t just an upgrade—it’s a toolkit built for the scale AI and HPC demand. Test it, push it, and let us know how it runs on your cluster.

Dive deeper into the technical details here.

Questions or feedback? Reach out—we’re all about making Grid Engine work harder for you.

Mastering GPUs with Open Cluster Scheduler (2024-07-1)

Mastering GPUs with Open Cluster Scheduler's RSMAP

Check out the full article here.

Unlock the full potential of your GPU resources with Open Cluster Scheduler's latest feature — Resource Map (RSMAP). This powerful and flexible resource type ensures efficient and conflict-free utilization of specific resource instances, such as GPUs.

Why Choose RSMAP?

- Collision-Free Use: Ensures exclusive access to resources, preventing conflicts between jobs.

- Detailed Monitoring & Accounting: Facilitates precise tracking and reporting of actual resource usage.

- Versatile Resource Management:

- Host-Level Resources: Manage local resources such as port numbers, GPUs, NUMA resources, network devices, and storage devices.

- Global Resources: Manage network-wide resources like IP addresses, DNS names, license servers, and port numbers.

Example: Efficient GPU Management

Define Your GPU Resource: Begin by opening the resource complex configuration using

qconf -mcand add the following line:GPU gpu RSMAP <= YES YES NONE 0This defines a resource named

GPUusing the RSMAP type, marking it as requestable and consumable with specific constraints.Initialize Resource on Hosts: Assign values to the GPU resources on a specific host by modifying the host configuration with

qconf -me <hostname>. For a host with 4 GPUs:complex_values GPU=4(0 1 2 3)This indicates the host has 4 GPU instances with IDs 0, 1, 2, and 3.

Submit Your Job: Request GPU resources in your job script:

#!/bin/bash env | grep SGE_HGRSubmit the job with the command:

qsub -l GPU=2 ./job.shYour job will now be allocated the requested GPU resources, which can be confirmed by checking the output for granted GPU IDs. Convert these IDs for use with NVIDIA jobs:

export CUDA_VISIBLE_DEVICES=$(echo $SGE_HGR_GPU | tr ' ' ',')

This innovative approach to resource management enhances both performance and resource tracking, making it a must-have for efficient computing. Plus, HPC Gridware set to release a new GPU package featuring streamlined configuration, improved GPU accounting, and automated environment variable management, taking the hassle out of GPU cluster management.

For more detailed information, check out the full article here. It's your go-to guide for mastering GPU management with the Open Cluster Scheduler!

Kubernetes Topology Manager vs. HPC Workload Manager (2020-04-15)

Looks like another gap between Kubernetes and traditional HPC scheduler is solved: Kubernetes 1.18 enables a new feature called Topology Manager.

That’s very interesting for HPC workload since the process placements on NUMA machines have a high impact for compute, IO, and memory intensive applications. The reasons are well known:

- access latency differs - well, it is NUMA (non-uniform memory access)!

- when processes move between cores and chips, caches needs to be refilled (getting cold)

- not just memory, also device access (like GPUs, network etc.) is non-uniform

- device access needs to be managed (process which runs on a core needs should access a GPU which is close to the CPU core)

- when having a distributed memory job all parts (ranks) should run with the same speed

In order to get the best performance out of NUMA hardware, Sun Grid Engine introduced around eleven years ago a feature which lets processes get pinned to cores. There are many articles in my blog about that. At Univa we heavily improved it and created a new resource type called RSMAP which combines core binding with resource selection, i.e. when requesting a GPU or something else, the process gets automatically bound to cores or NUMA nodes which are associated with the resource (that’s called RSMAP topology masks). Additionally the decision logic was moved away from the execution host (execution daemon) to the central scheduler as it turned out to be necessary for making global decisions for a job and not wasting time and resources for continued rescheduling when local resources don’t fit.

Back to Kubernetes.

The new Kubernetes Topology Manager is a component of Kubelet which also takes care about these NUMA optimizations. That it is integrated in Kubelet, which runs locally on the target host is the first thing to note.

As Topology Manager provides a host local logic (like in Grid Engine at the beginning) it can: a.) make only host local decisions and b.) lead to wasted resources when pods needs to be re-scheduled many times to find a host which fits (if there is any). That’s also described in the release notes as a known limitation.

How does the Topology Manager work?

The Topology Manager provides following allocation policies: none, best-effort, restricted, and single-numa-node. These are kubelet flags to be set.

The actual policy which is applied to the pod depends on the kubelet flag but also on the QoS class of the pod itself. The QoS class of the pod depends on the resource setting of the pod description. If cpu and memory are requested in the same way within the limits and requests section then the QoS class is Guaranteed. Other QoS classes are BestEffort and Burstable.

The kubelet calls so called Hint Providers for each container of the pod and then aligns them with the selected policy, like checking if it works well with single-numa-node policy. When set to restricted or single-numa-node it may terminate the pod when no placement if found so that the pod can be handled by external means (like rescheduling the pod). But I’m wondering if that will work when having side-car like setups inside the pod.

The sources of the Topology Manager are well arranged here: https://github.com/kubernetes/kubernetes/blob/master/pkg/kubelet/cm/topologymanager

In order to summarize. A bit complicated since I can’t really find the decision logic of the hint provider documented and so the source code will be the source of truth. Also, since it is a host local decision it will be pain for distributed memory jobs (like MPI jobs). Maybe they move the code one day to the scheduler, like it was done in Grid Engine. It is really good that Kubernetes takes now also care about those very specific requirements. So, it is still on the right way to catch up with all required features for traditional HPC applications. Great!

At UberCloud we closely track Kubernetes as platform for HPC workload and are really happy to see developments like the Topology Manager.

Using Open Containers with runc in a Univa Grid Engine Compute Cluster (2015-06-28)

runc is a tool written in Go which is creating and starting up a Linux container according to the OCF specification. Its source code repository can be found here.

If you have a Go development environment then building it is very simple - just follow the instructions in the README (they are using a Makefile which internally calls godep, the standard tool for handling package dependencies in Go / probably you need to install it as well).

After installing the single runc binary you are able to startup containers right on the command line by pointing runc to a JSON description of the container. The container itself obviously also needs to be on the file system in order to chroot to it (which is done by runc). One major difference to Docker itself is that it does not do any kind of image management, but probably this is not required in case you have a good shared filesystem.

How to use runc in Univa Grid Engine

After runc is verified to run on command line it is time to use it under the control of Univa Grid Engine in order to exploit your compute clusters resources.

The integration can be very straight forward depended in what you want to achieve. I keep it here as simple as possible.

First of all you want to submit the container described by the Open Container Format (OCF) as JSON description to Univa Grid Engine and probably also use the resource management system of Grid Engine for handling cgroups and other limitations. This is possible since all container processes are children of runc - no daemon here is in play.

In order to setup running runc you can override the starter_method in the Univa Grid Engine queue configuration (qconf -mq all.q for example). The starter method is executed on the compute node in oder to start up the job. Unfortunately runc requires root privileges and the starter method is started as user. Therefore a sudo is required. Note that running a privileged process is always a security risk, but I’m always fearless on my private cluster on my laptop!!!

Point the starter_method (in the queue configuration like qconf -mq all.q) to the path where you have following script:

#!/bin/sh

sudo /usr/local/bin/runc --id "$JOB_ID" $@

Depending of your use case you need to allow that runc can run as root without requiring a password. This would be required in any case when running in batch mode.

Example using visudo:

daniel ALL=(ALL) NOPASSWD: /usr/local/bin/runc

You can also use user groups instead of specifying users.

A quick check:

$ sudo runc

JSON specification file for container.json not found

Switching to a directory where I have a container.json :

$ sudo runc

/ $ exit

Now, lets submit an interactive job. The pty switch is required when submitting a JSON file but requiring a shell.

I’m using the busy box image which is the example of runc README on github.

$ ls

busybox.tar container.json rootfs runc

Now I want to run the container on my cluster using a my shared filesystem.

$ qrsh -pty y -cwd container.json

/ $ ps -ef

PID USER COMMAND

1 daemon sh

7 daemon ps -ef

/ $ ls -lsiah

total 56

1999621 4 drwxr-xr-x 17 default default 4.0K Jun 23 05:41 .

1999621 4 drwxr-xr-x 17 default default 4.0K Jun 23 05:41 ..

1992545 0 -rwxr-xr-x 1 default default 0 Jun 27 15:31 .dockerenv

1992546 0 -rwxr-xr-x 1 default default 0 Jun 27 15:31 .dockerinit

2007813 4 drwxr-xr-x 2 default default 4.0K May 22 2014 bin

846097 0 drwxr-xr-x 5 root root 360 Jun 28 06:42 dev

2024173 4 drwxr-xr-x 6 default default 4.0K Jun 27 15:31 etc

2024184 4 drwxr-xr-x 4 default default 4.0K May 22 2014 home

2024187 4 drwxr-xr-x 2 default default 4.0K May 22 2014 lib

1992672 0 lrwxrwxrwx 1 default default 3 May 22 2014 lib64 -> lib

1992673 0 lrwxrwxrwx 1 default default 11 May 22 2014 linuxrc -> bin/busybox

…

/ $ exit

The PTY request is required otherwise we don’t get the interactive shell together with the command (in our case it is the JSON file). The -cwd argument specifies that the runc is executed in the current directory which removes the need for specifying the full path to the JSON file.

Now we want to run a batch job using this busy box container. Let’s assume our task is executing whoami in the container. You need to create a new JSON file with a different processes section:

5 "processes": [

6 {

7 "tty": false,

8 "user": "root",

9 "args": [

10 "whoami"

11 ],

12 "env": [

13 "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

14 "TERM=xterm"

15 ],

16 "cwd": ""

17 }

So, tty is set to false. Saving that copy as run.json execution works like following:

$ qsub -cwd -b y ./run.json

Your job 3000000178 ("run.json") has been submitted

$ cat run.json.o3000000178

root

This is just a start. There are many features you can exploit.

This approach works well with Univa Grid Engine's cgroups integration. You can specify the amount of cores allocated for cpuset, main memory limit, and virtual memory limit for example:

$ qsub -binding linear:1 -l m_mem_free=2G,h_vmem=3G -cwd -b y ./run.json

Now the container is under complete control of Univa Grid Engine’s cgroups when it comes to cpu usage and memory usage.

Other possibilities include checkpointing integration with criu. You also need to align requests for Grid Engine with the JSON file which can be done certainly with a JSV script. Also with sudoers file you can limit which containers are allowed to be executed for which user or user group. This can be translated easily to Univa Grid Engine’s job class functionality making usage of containers save and very simple for the user.

Daniel

Grid Engine and Multitenancy (2015-02-22)

Univa Grid Engine can run any workload in your compute cluster. Hence Univa Grid Engine can also easily run Grid Engine as a job. This could be probably required if you want to strictly isolate temporary Grid Engine installations from each other or just want to have some fun with your own scheduler within the scheduler.

To reduce the word count in the article let’s just go through this learning task by using the free Univa Grid Engine trial version which you can download here and follow the recipe in the next sections.

Setup the Grid Engine Demo Enviornment

To start from scratch we need to first setup a demo environment. This is described in an earlier article on my blog. The only requirements are having free Virtual Box and and free Vagrant installed. Then follow the blog for starting it up (copy the demo tar.gz in the directory and do vagrant up - version 8.2.0-demo is required here - for another version you need to adapt). Now you should have a 3 node cluster (3 VMs) running the Univa Grid Engine cluster scheduler.

Next you need to log in (password for vagrant is always vagrant, also for root):

vagrant ssh master

[vagrant@master vagrant]$ qhost

HOSTNAME ARCH NCPU NSOC NCOR NTHR NLOAD MEMTOT MEMUSE SWAPTO SWAPUS

----------------------------------------------------------------------------------------------

global - - - - - - - - - -

execd1 lx-amd64 4 1 4 4 0.01 490.0M 89.5M 928.0M 0.0

execd2 lx-amd64 4 1 4 4 0.01 490.0M 88.8M 928.0M 0.0

master lx-amd64 4 1 4 4 0.05 490.0M 104.3M 928.0M 356.0K

You see 3 compute nodes.

Configure Grid Engine Resources

For running UGE as a job, UGE needs to bootstrap itself. This is an easy task since you can setup a UGE cluster with just one command and a configuration file. The critical point here is the configuration file, since it needs to be adapted depending on which hosts and resources were selected for UGE as a job.

Let’s look at the sample config file (which was used to setup our virtual Grid Engine cluster) here.

There are a few setting we need to adapt dynamically after our UGE was scheduled:

- SGE_ROOT: Of course each UGE needs a different root directory. It must be shared by all nodes which are part of the job / UGE installation.

- SGE_QMASTER_PORT: Needs to be unique as well

- SGE_EXECD_PORT: Also UGE needs to make a unique selection

- SGE_CLUSTER_NAME: Can be derived from the qmaster port.

- GID_RANGE: No overlapping gid range! Otherwise you can cause severe issues in accounting. Let UGE select one for you.

- QMASTER_SPOOL_DIR: Dependent from the SGE_ROOT

- EXEC / SUBMIT / ADMIN_HOST_LIST: All hosts which were selected from UGE

Also note that we are running the job not as root, hence we must disable init script creation. If you want to have a multi-user cluster in this Grid Engine job, than you need to setup the cluster as root.

There are a lot of unique things to select. But this is very easy to configure with the RSMAP resource type in Univa Grid Engine.

Here a sample configuration script which installs these resources in UGE:

#!/bin/sh

qconf -sc > $$.tmp

echo "MT_SGE_ROOT MT_SGE_ROOT RSMAP <= YES JOB 0 0" >> $$.tmp

echo "MT_QMASTER_PORT MT_QMASTER_PORT RSMAP <= YES JOB 0 0" >> $$.tmp

echo "MT_GID_RANGE MT_GID_RANGE RSMAP <= YES JOB 0 0" >> $$.tmp

echo "MT_EXEC_SPOOL MT_EXEC_SPOOL RSMAP <= YES JOB 0 0" >> $$.tmp

qconf -Mc $$.tmp

rm $$.tmp

Just copy and paste that in an editor (vi?) on the master host und run it.

[vagrant@master vagrant]$ vi config.sh

[vagrant@master vagrant]$ ./config.sh

vagrant@master added "MT_SGE_ROOT" to complex entry list

vagrant@master added "MT_QMASTER_PORT" to complex entry list

vagrant@master added "MT_GID_RANGE" to complex entry list

Since three clusters a enough on three virtual hosts to play with, we add now three resources for each type.

[vagrant@master vagrant]$ qconf -mattr exechost complex_values "MT_SGE_ROOT=3(cluster1 cluster2 cluster3)" global

"complex_values" of "exechost" is empty - Adding new element(s).

vagrant@master modified "global" in exechost list

These are our $SGE_ROOT directories. They will appear as subdirectories under /vagrant since it is shared already.

$ qconf -mattr exechost complex_values "MT_QMASTER_PORT=3(2324 2326 2328)" global

These are the portnumbers the UGE installation gets. Note that the execd port will be just qmaster + 1 as this is default convention.

$ qconf -mattr exechost complex_values "MT_GID_RANGE=3(22000 23000 24000)" global

The GID ranges are quite important, please don’t create a conflict with the main UGE installation.

The execd spool directories which needs to exist before a job runs also needs to be selected. (I put that in the Vagrant installation)

$ qconf -mattr exechost complex_values "MT_EXEC_SPOOL=3(local1 local2 local3)" global

That’s it from the main configuration part. The hostnames are dynamically requested and derived from Grid Engine (they will appear in the $PE_HOSTFILE on the master tasks host).

The only thing left is adding a parallel environment so that you can request multiple hosts for your cluster:

$ qconf -ap uge

pe_name uge

slots 1000

user_lists NONE

xuser_lists NONE

start_proc_args NONE

stop_proc_args NONE

allocation_rule 1

control_slaves FALSE

job_is_first_task TRUE

urgency_slots min

accounting_summary FALSE

daemon_forks_slaves FALSE

master_forks_slaves FALSE

Then save…^ZZ

vagrant@master added "uge" to parallel environment list

Allocation rule 1 here means that you basically match execds for your inner UGE installation to slots.

Add it to the all.q:

$ qconf -aattr queue pe_list uge all.q

vagrant@master modified "all.q" in cluster queue list

Finished!

Writing the Job that Sets Your Cluster Up

Here is our job script (job.sh):

Make it executable:

$ chmod +x job.sh

Now submit this script which sets up a user define Grid Engine installation with 2 execution hosts (UGE 2).

$ qsub -N cluster -o /vagrant/UGE/job1.log -j y

-l MT_SGE_ROOT=1,MT_QMASTER_PORT=1,MT_GID_RANGE=1,MT_EXEC_SPOOL=1

-pe uge 2 ./job.sh

With qstat you can see it running:

$ qstat -g t

job-ID prior name user state submit/start at queue jclass master ja-task-ID

-------------------------------------------------------------------------------------------------------------------------------------------------

1 0.55500 cluster vagrant r 02/21/2015 19:00:40 all.q@execd1 MASTER

1 0.55500 cluster vagrant r 02/21/2015 19:00:40 all.q@execd2 SLAVE

With qstat -j 1 you can see some interesting details about the new cluster within you normal cluster:

context: STATUS=RUNNING,SETTINGS=/vagrant/cluster1

binding: NONE

mbind: NONE

submit_cmd: qsub -N cluster -o /vagrant/UGE/job.log -j y -l MT_SGE_ROOT=1,MT_QMASTER_PORT=1,MT_GID_RANGE=1,MT_EXEC_SPOOL=1 -pe uge 2 ./job.sh

granted_license 1:

usage 1: cpu=00:00:00, mem=0.00000 GBs, io=0.00000, vmem=N/A, maxvmem=N/A

resource map 1: MT_EXEC_SPOOL=global=(local1), MT_GID_RANGE=global=(22000), MT_QMASTER_PORT=global=(2324), MT_SGE_ROOT=global=(cluster1)

On the resource map entry you can see what the Univa Grid Engine scheduler has selected (port number / cluster name / spooling directories). Also see STATUS=RUNNING in the context - this appears after the installation performed from the job. The settings points to the installation directory, this is used in order to access the new cluster. But first we schedule another cluster, we have one host left.

$ qsub -N cluster -o /vagrant/UGE/job2.log -j y

-l MT_SGE_ROOT=1,MT_QMASTER_PORT=1,MT_GID_RANGE=1,MT_EXEC_SPOOL=1

-pe uge 1 ./job.sh

This job is running as well. Wenn submitting a third, this one stays pending of course since we are using already all hosts (of course you can configure oversubscription).

Now through the SETTINGS information from the job context we are accessing our first inner cluster:

source /vagrant/cluster1/default/common/settings.sh

Doing a qhost gives us two hosts, since we requested two:

[vagrant@master ~]$ qhost

HOSTNAME ARCH NCPU NSOC NCOR NTHR NLOAD MEMTOT MEMUSE SWAPTO SWAPUS

----------------------------------------------------------------------------------------------

global - - - - - - - - - -

execd2 lx-amd64 4 1 4 4 0.02 490.0M 102.5M 928.0M 0.0

master lx-amd64 4 1 4 4 0.04 490.0M 124.5M 928.0M 472.0K

Now you can start jobs...

[vagrant@master ~]$ qsub -b y sleep 1234

...doing qstat and qacct etc.

Finally you can go back to your "real" cluster with

[vagrant@master ~]$ source /vagrant/UGE/default/common/settings.sh

Where you only can see your two jobs which sets up UGE clusters.

When you want to delete a cluster, just use qdel.

There is one task left, cleaning up the directories after a cluster as a job finished. It is up to the reader to implement that (using an epilog script?), I just wanted to show here how easy it is to run Univa Grid Engine under Grid Engine. As we have seen the RSMAP resource type (here used as global per job consumable) is a great help here for selecting ports etc.

Also consider to startup the DRMAA2 d2proxy in the newly created cluster in order to access it from home (with ssh tunneling) or use it as a simple multi-cluster access toolkit (which now supports file staging as well).

Further more advanced considerations: start execds with qrsh -inherit (export SGE_ND=1 might help here) for full accounting and process reaping, doing cleanup of directories after the cluster job finished, using transfer queues to forward jobs from original cluster to inner cluster, adding load sensors for tracking usage of inner cluster from main cluster and remove cluster when unutilized, spawn more inner execds with additional job types...

Grid Engine Webinars 2014 (2015-01-05)

At Univa we hold 2014 several webinars about Univa Grid Engine and related topics. They contain lots of useful technical information also for open source Grid Engine users. The videos can be downloaded for free from the Univa homepage.

Here is a list with the links:

| Webinar | Link |

|---|---|

| Univa Grid Engine 8.2 | http://www.univa.com/resources/webinar-grid-engine-820.php |

| Upgrading to Univa Grid Engine | http://www.univa.com/resources/webinar-upgrading-to-820.php |

| Nvidia GPUs and Intel Co-processors | http://www.univa.com/resources/webinar-coprocessors.php |

| UniSight | http://www.univa.com/resources/webinar-unisight.php |

| License Orchestrator | http://www.univa.com/resources/webinar-license-orchestrator.php |

| UniCloud | http://www.univa.com/resources/webinar-unicloud.php |

| Read Only Qmaster Thread & Resource Quota Sets | http://www.univa.com/resources/webinar-rqs.php |

| CGroups | http://www.univa.com/resources/webinar-cgroups.php |

| DRMAA2 API | http://www.univa.com/resources/webinar-drmaav2.php |

| Upgrading to Univa Grid Engine | http://www.univa.com/resources/webinar-upgrading.php |

Finding all host groups in which a Grid Engine host is contained (2014-12-09)

There is no direct way in Grid Engine for getting all host groups in which one particular host is part of. But Grid Engine offers a mighty command qconf -shgrp_resolved which returns all hosts of a hostgroup (also when the hostgroup itself contains other hostgroups recursively).

Following simple shell script returns all hostgroups of which the host given as first parameter to the script is part of.

#!/bin/sh

host=$1

for hgrp in `qconf -shgrpl`; do

for h in `qconf -shgrp_resolved $hgrp`; do

if [ "$h" = "$host" ]; then

echo $hgrp

fi

done

done

Update: Alexandru's one liner for this task is easier to setup as an simple alias (thanks for the hint).

#!/bin/bash

for aa in $(qconf -shgrpl) ; do qconf -shgrp $aa | grep -q $1 && echo $aa ; done

The Univa Grid Engine Scheduler Configuration: Why artificial load settings matters (2014-11-30)

Grid Engine's scheduler configuration is very flexible. But unfortunately flexibility often comes with interdependencies and hence increases complexity.

In this article I want to have a look at one particular feature of Grid Engine: The artificial load functionality - the job load adjustments.

What’s artificial load?

Load based scheduling is very common, administrators often want to schedule jobs to the least loaded hosts. Hence this is configured in the default scheduler configuration of Grid Engine (man sched_conf -> queue_sort_method).

But what should be considered as "load"? Usually it is the operating system load measurement (man uptime), the 5 min. load average (load_avg) divided by the number of cores (processors) on the host (= np_load_avg). In Grid Engine the load can be set in the load_formula (qconf -msconf) when load based scheduling is turned on in the queue_sort_method (set to load).

As you can see the default load comes from the compute nodes and are reported in larger intervals (configurable in the load_report_time) to the master process and hence the scheduler.

Let's assume you have 48 core machines in your cluster. Each host is allowed to run 48 single-threaded jobs in parallel. The cluster is empty.

Now users are submitting dozens of jobs - what happens in the scheduler?

The scheduler sorts the hosts (queue instances) by the load and starts to schedule the job with the highest priority to the host with the lowest load. Then it takes the second job in priority order to schedule it also the the host with the lowest load. When you look at your watch the scheduling of the second job is around a ms later hence the load situation of the hosts didn’t change (anyhow the scheduler wouldn’t get the information during a scheduling run). What now happens is that the first 48 jobs are filling up the least loaded host.

This is not optimal. In order to approach the problem the Univa Grid Engine scheduler offers an artificial load functionality. Each time the scheduler schedules a job it makes load adjustments to the selected hosts.

The amount and time the artificial load remains on the host is configurable in the job_load_adjustments and load_adjustment_decay_time (man sched_conf).

When np_load_avg=0.5 is set in job_load_adjustments then this means following: Each granted slot for a scheduled job adds an artificial load of 0.5 devided by number of processors of host to np_load_avg. This division is counterintuitive since np_load_avg is already normalized by processors. Nevertheless the bevavior the admin wants to have since the load is finally depended from the machine on which it is scheduled. On a host with 2 processors the same job adds half the load to np_load_avg than on a compute node with 1 processor.

When active the scheduling behavior is like this: Searching least loaded host, trying to accommodate the job, add job_load_adjustments to host load, search least loaded host, …. Now our 48 jobs are distributed around the least loaded hosts of the cluster - excellent!

A connected secret

Univa Grid Engine has another parameter, which is part of the queue configuration, where Grid Engine puts overloaded hosts into an alarm state (man queue_conf): load_thresholds.

When the load + artificial load exceeds the load_threshold then the scheduler will consider this queue instances as overloaded and will not scheduler any jobs to those hosts! Since all this happens in the scheduler it is not visible for you as alarm state (which is a state where a host does not accept any new jobs).

Now let’s configure a load_adjustment of np_load_avg=1 and assume having just one host with 16 slots but 8 cores. In the queue configuration set the load_threshold to 1.0.

After submitting 16 jobs you will see that only 8 jobs are placed on the host - the other jobs a staying pending (and you don’t see any host in alarm state)!

Well, the issue relaxes over time since the load adjustment finally goes away.

But this is really something to have in mind when oversubscribing hosts: Adapt the load_adjustment to your needs! Meaning when having 48 cores und single threaded short jobs you need to drastically reduce the load_adjustment (probably just setting it to 0.01) for np_load_avg. Alternatively you can increase the load_thresholds of your queues. This gives you what you want - optimal placement and usage of your cluster.

To summarize there are two key messages:

- Artificial load helps you to let the jobs be placed optimally in situations of cluster under utilization.

- When using artificial load on oversubscribed hosts, please think about your load_threshold settings in the queues depending on the amount of jobs running and the load_adjustment setting.

Try yourself! There are some nice scheduling settings possible with the load threshold. Like if you want to schedule just max. one job to certain hosts in one scheduling run. This you can activate by setting the load adjustment time decay time to a very short time (1 second) and increase the load adjustment to a very large value.

Univa Grid Engine 8.2 Released (2014-09-16)

A little bit late with my blog entry, I’m happy to announce the availability of a new major version update of Grid Engine: Univa Grid Engine 8.2

There are too many improvements to handle them all in a short blog entry like this. Also lots of changes were done under the hood: new thread pool which handles qstat / qhost request, made code compatible with Windows, etc.

The new reader thread pool leads to very good responsiveness also in busy, huge clusters. The amount of threads serving status requests can be increased up to 64 threads. This thread pool works independent from the qmaster hence it removes lots of load from it.

The Windows support is a major step for simplifying environments where besides the Linux HPC cluster also Windows hosts are integrated, for job submission, or job execution. Unlike before there is no additional Unix emulation layer required anymore. The commands are just running natively on Windows command line.

Besides these changes we introduced also lot’s of functionality which helps the admin in their daily live. Resource reservation is now completely transparent and even shown in the qstat output. Another milestone and a major differentiator to the open source Grid Engine version is the improved support for parallel jobs. Before there was no means to reliable control resource limitations for parallel jobs which are distributed unevenly (like using round robin of fill up slot allocation strategy) since different parallel jobs started up the tasks (or ranks) in different ways. Like one makes one connection to an compute node and forks then the tasks, but others creating connections for each task / rank granted on a compute node. This can now be set up in the parallel environment. Hence the user can be sure that the requested limit (like main memory limit) is always set in the same way.

There are too many other changes to cover them all: clock resolution is now everywhere in milliseconds, the maximum job id is configurable and can now be up to 4 billion, qstat / qacct show now the exact submission command line, and so on.

When it comes to integrate Univa Grid Engine in other systems, we have now support for the Cray XC30 systems, i.e. those clusters can easily be integrated in existing Univa Grid Engine clusters or upgraded with Univa Grid Engine to have superior job scheduling capabilities when managing jobs. We also include HP Insight CMU 7.2 (and higher) support with Univa Grid Engine 8.2 (was available separately before), finally we ship a beta version of a new C API for job submission, job workflow management, and cluster monitoring based on the open DRMAA2 standard.

Please check the release notes for more detailed information.

Installing a Univa Grid Engine Demo Cluster with One Command (2014-07-13)

Univa provides demo packages for Univa Grid Engine for free. The only restriction is that those packages have a built-in limitation to handle a cluster with 48 cores at maximum. But this should be sufficient to test certain functionality or try out and learn the Grid Engine cluster scheduler in general.

But when you just want to try out Grid Engine on your laptop or desktop you probably don’t want to go the way of doing an own installation, you just want a test-bed for trying Grid Engine commands out.

I played a bit with Vagrant, which is a nice tool for creating Virtual Box machines automatically and hacked a script which installs Grid Engine on 3 virtual nodes completely automatically.

Here are the prerequisites:

- Having you own laptop / desktop with MacOS X / Linux (untested) or Windows (untested) running

- Having free VirtualBox installed

- Having free Vagrant installed

- Having git version management installed (or alternative you can copy the files from my github account manually)

- Having free Grid Engine tar.gz packages (I assume here ge-8.1.5-demo-common.tar.gz and ge-8.1.5-demo-bin-lx-amd64.tar.gz which you can get here for free)

Go to command line and create a directory in your home folder and clone in a directory containing all files needed for Vagrant to do its job:

# git clone https://github.com/dgruber/vagrantGridEngine.git

or alternatively copy all files from my github account (https://github.com/dgruber/vagrantGridEngine/archive/master.zip) into an empty subdirectory.

Then copy the tar.gz from Univa (expected 8.1.5) into the same directory where Vagrantfile is.

It should look like this:

Centenario:test daniel$ ls -l

-rw-r--r-- 1 daniel staff 5724 13 Jul 13:42 Vagrantfile

-rw------- 1 daniel staff 1610 13 Jul 14:04 authorized_keys

-rw-r--r-- 1 daniel staff 1436 13 Jul 16:40 auto_install_template

-rw-r--r-- 1 daniel staff 32097445 13 Jul 11:10 ge-8.1.5-demo-bin-lx-amd64.tar.gz

-rw-r--r-- 1 daniel staff 3332163 13 Jul 11:10 ge-8.1.5-demo-common.tar.gz

-rwxrwxr-x 1 daniel staff 1161 13 Jul 16:44 hostnames.sh

-rw------- 1 daniel staff 1679 13 Jul 12:28 id_rsa

-rw-r--r-- 1 daniel staff 396 13 Jul 12:28 id_rsa.pub

-rwxr-xr-x 1 daniel staff 786 13 Jul 16:24 installation.sh

-rw-r--r-- 1 daniel staff 1970 13 Jul 16:00 known_hosts

-rw-r--r-- 1 daniel staff 74 13 Jul 12:19 start_uge.sh

If you have this, all what is required to get your Univa Grid Engine 8.1.5 demo cluster running is to perform one command in this directory:

vagrant up

This will take a while at the very first time, because it needs to download a so called „box“ (a CentOS 6.5 image ready for Vagrant) from the Vagrant repository. This needs to be done just one time.

Now all 3 virtual machines are installed in VirtualBox automatically and are going to be configured. In order to use your cluster you can do an ssh to the Grid Engine master host:

vagrant ssh master

Then you can play with Grid Engine (if Grid Engine commands are not available you need to do that first: source /vagrant/UGE/default/common/settings.sh):

qhost

qstat -f

qsub -b y sleep 123

qstat -f

qstat -j <jobid>

If you want to shut the cluster down and remove all occurrences of it then you can logout and do a

vagrant destroy

or

vagrant destroy -f

in the same directory where you called vagrant up. That’s it. If you have any problems or enhancements, please use my github repository for reporting.

Cheers

Note that the root password is vagrant. If you have different versions of Grid Engine packages just have a look in the installation.sh and adapt the package names. Be aware that the packages must be in the directory or a subdirectory.

HEPiX 2014 Slides about cgroup Integration in Univa Grid Engine (2014-06-13)

You can find my slides from the HEPiX 2014 conference about the cgroup integration in Univa Grid Engine here.

Client Side JSV Performance Comparison - And the Winner is: Go (#golang) ;-) (2014-03-15)

With JSV scripts you can verify and change your job submission parameters. Depending on the called functions you can also reject jobs which does not follow rules you specify in the script. Those JSV scripts can be installed globally on the qmaster (qconf -mconf / jsv_url parameter) or can be specified during job submission time with -jsv (client side jsv). With recent performance improvements when processing client side JSV scripts in UGE 8.1.7 it makes now sense to compare different scripting languages in which JSV scripts can be written.

With Sun Grid Engine or older Univa Grid Engine versions client side JSV script were slow, which was an issue when submitting massive amount of jobs in scripts. Now, depending on the scripting language the complete job submission time – including JSV processing within qsub - can go down to 38ms.

Because the Go programming language is fast and elegant I implemented the JSV protocol in a Go library, which I put under an open source license on my github account. You can get it from:

https://github.com/dgruber/jsv

Traditionally Grid Engine supports Java, TCL, Perl, and bash.

In order to compare the job submission time performance with a client side JSV I created a very basic script in Go, TCL, Perl and bash. Then I submitted jobs multiple times and measured the time with the “time” command line tool.

Following boxplots are showing the results. Not surprisingly Go (#golang) offers by far the fastest job submission performance (down to 38ms), while TCL and Perl are still good in performance. Bash is very slow but still can be used when not doing mass-submits.

NOTE: Without using JSV a qsub is still many times faster (down to 12ms in my VM). Using a multi-threaded submit tool based on DRMAA API (see an article here in my blog about Go DRMAA) I got an average per job submission time of 1-2ms.

The scripts are adding the core binding parameter for a job (-binding linear:1). Below you can find the JSV scripts which I used for testing submission performance. All jobs where submitted with:

qsub -b y -jsv ./<script> sleep 123

How to configure different prolog scripts for different hosts or host groups (2014-03-03)

When configuring Grid Engine a good tuning point is always to reduce the amount of queues (i.e. queue instances). When having a need for different machine type specific prolog and epilog scripts (for different heath-check for example) this does not require to configure multiple queues containing different prolog and epilog scripts. Instead you can configure them in the same queue using the bracket notation where you can define settings for queue instances (a queue setting for a host) and queue domains (a queue setting for a hostgroup).

Here is an example of how to configure different prolog scripts for different host groups

I‘ve configured two additional host groups: @centos and @suse11 for which I want to have different prolog scripts running before a job is started.

daniel@mint14:~$ qconf -shgrpl

@allhosts

@centos

@suse11

My prolog scripts just printing out something on stdout.

daniel@mint14:~$ cat /nfs/prolog_cent.sh

#!/bin/sh

echo "I'm a CentOS box"

daniel@mint14:~$ cat /nfs/prolog_suse.sh

#!/bin/sh

echo "I'm a SUSE box"

Now we can add those two prolog scripts in the prolog configuration of one queue. Here for the host-group @centos the /nfs/prolog_cent.sh script is set. The prolog for host-group @suse11 is set respectively(of course you can also just use an host name instead of a hostgroup).

daniel@mint14:~$ qconf -mattr queue prolog "NONE,[@centos=/nfs/prolog_cent.sh],[@suse11=/nfs/prolog_suse.sh]" all.q

daniel@mint14 modified "all.q" in cluster queue list

Now verify that the queue configuration was set in the right way.

daniel@mint14:~$ qconf -sq all.q | grep prolog

prolog NONE,[@centos=/nfs prolog_cent.sh], \

[@suse11=/nfs/prolog_suse.sh]

Finally we submit two jobs to two different hostgroups (i.e. here queue domains since the job is required to run in all.q).

daniel@mint14:~$ qsub -b y -q all.q@@suse11 /bin/sleep 0

Your job 8 ("sleep") has been submitted

daniel@mint14:~$ qsub -b y -q all.q@@centos /bin/sleep 0

Your job 9 ("sleep") has been submitted

Now check the job output file. It must contain the prolog output.

daniel@mint14:~$ cat /home/daniel/sleep.o9

I'm a CentOS box

daniel@mint14:~$ cat /home/daniel/sleep.o8

I'm a SUSE box

Main Memory Limitation with Grid Engine - A short Introduction into cgroups

One of my current projects is implementing cgroups in Univa Grid Engine. It will be available in the next releases (Univa Grid Engine 8.1.7 or 8.2). Control groups are a Linux kernel enhancement which provides some nice features for better resource management. Hence cgroups features can only be used on 64 bit Linux hosts (lx-amd64). This article is a short introduction into one of the supported features for Univa Grid Engine: main memory limitation for jobs.

The cgroups subsystems can be enabled in Grid Engine either on cluster global level or on host local level (for heterogenous clusters) in the host configuration. The current configuration is opened with qconf -mconf global or qconf -mconf <host-name>. You will note following new configuration parameter list:

> qconf -mconf global

...

cgroups_params cgroup_path=none cpuset=false mount=false \

freezer=false killing=false forced_numa=false \

h_vmem_limit=false m_mem_free_hard=false \

m_mem_free_soft=false min_m_mem_free=0

For enabling cgroups the cgroups_path must be set to the path on which the cgroups subsystems are mounted. On RHEL 6 hosts the default is /cgroup but /sys/fs/cgroup is also a frequently used directory. In order to enable specific subsystems or control their behavior the remaining configuration parameters in the Grid Engine host configuration have to be activated.

There are two ways to restrict main memory for a job:

hard limitation: All processes of the job combined are limited from the Linux kernel that they are able to use only the requested amount of memory. Further

malloc()calls will fail.soft limitation: The job can also use more memory if it is free on the host - but if the kernel runs out of memory because of other processes, the job is pushed back to the given limits (meaning that the memory overflow is swapped out to disk).

Hard memory limitation is turned on by setting the parameter m_mem_free_hard=true (soft limitation is activated respectively). That's it from the Grid Engine configuration point of view! Now when a job requests main memory with m_mem_free and the job is scheduled to an host with such a configuration, a cgroup in the memory subsystem is created automatically and the job is put in. The limit can be used for batch jobs, parallel jobs and interactive jobs. For parallel jobs the limit is set to the requested amount of memory multiplied by the granted slots for the jobs as expected.

Following example demonstrates a job which behaves well, meaning using less memory than requested hence the job remains unaffected by the limit. (Btw. memhog is a utility which requests a given amount of memory and frees it (repeatedly).)

> qsub -l h=plantation,m_mem_free=1G -b y memhog -r100 990m

Your job 4 ("memhog") has been submitted

> qacct -j 4

==============================================================

qname all.q

hostname plantation

...

jobname memhog

jobnumber 4

...

qsub_time Sun Aug 25 11:39:20 2013

start_time Sun Aug 25 11:39:26 2013

end_time Sun Aug 25 11:39:50 2013

...

slots 1

failed 0

exit_status 0

ru_wallclock 24

ru_utime 22.822

ru_stime 0.411

ru_maxrss 1014384

...

maxvmem 1015.188M

...

In the next example the job is aborted immediately because it used more main memory than requested:

> qsub -l h=plantation,m_mem_free=1G -b y memhog -r100 1050m

Your job 5 ("memhog") has been submitted

> qacct -j 5

==============================================================

qname all.q

hostname plantation

...

jobname memhog

jobnumber 5

qsub_time Sun Aug 25 11:41:53 2013

start_time Sun Aug 25 11:41:56 2013

end_time Sun Aug 25 11:41:57 2013

...

slots 1

failed 0

exit_status 137

ru_wallclock 1

ru_utime 0.504

ru_stime 0.315

ru_maxrss 1048452

...

maxvmem 1.050G

…

And finally an interactive session is showed. The interesting part here is that the interactive session as such is not aborted, only the command started by the interactive session is aborted due to the lack of memory.

> qrsh -l h=plantation,m_mem_free=1G

daniel@plantation:~> memhog -r4 1000m

....................................................................................................

....................................................................................................

....................................................................................................

....................................................................................................

daniel@plantation:~> memhog -r4 1050m

.......................................................................................................Killed

daniel@plantation:~> memhog -r4 1024m

.......................................................................................................Killed

daniel@plantation:~> memhog -r4 1023m

.......................................................................................................Killed

daniel@plantation:~> memhog -r4 1020m

......................................................................................................

......................................................................................................

......................................................................................................

......................................................................................................

daniel@plantation:~> exit

logout

>

There are two additional settings you should know about. Since the Grid Engine process which takes care about starting and tracking your application (sge_shepherd) is also part of the job and accounted in the memory it could produce additional overhead to the memory footprint of your job. Additionally it should be prevented that the user sets realistic low limits for the jobs. Both issues are addressed with the min_m_mem_free limit parameter. If you configure it to let's say 250M, each job gets at least a limit of 250M even the job requested only 10M. Nevertheless for job accounting with qstat or qacct only the job requests are considered. The second setting is the mount=true setting. If turned on the memory subsystem is tried to be mounted automatically by Univa Grid Engine if not already available under <cgroups_path>/memory. On a properly configured host this should be done already during system boot time but setting this parameter prevents from failures (or just simplifies things in some cases).

The other cgroups subsystems used by the upcoming Univa Grid Engine versions I will discuss in one of the next blog entries.

Tracing execd -> qmaster protocol with qping (2013-09-23)

Sometimes it is useful to display the communcation between two Grid Engine daemons. For example when load sensor values are not visable in qstat (or wrong values appear).

In order to print out a protocol trace you can connect to the execd with the qping tool, which is shipped with Grid Engine.

First you need to switch to the root account on the execd. Then you have to enable full reporting for qping by setting a specific environment variable:

export SGE_QPING_OUTPUT_FORMAT="s:12 s:13"

This enables columns 12 and 13. See qping -help for more information.

Then you can connect to the execd with following command:

qping -dump_tag ALL INFO myhostname $SGE_EXECD_PORT execd 1

Don't forget to source all Grid Engine environment variables (e.g source $SGE_ROOT/default/common/settings.sh, where $SGE_ROOT is the path to your installation) beforehand.

You will get an output like following when qping is executed:

open connection to "u1010/execd/1" ... no error happened

…

List: <report list> * #Elements: 2

REP_type (Ulong) = 1

REP_host (Host) = u1010

REP_list (List) = full {

List: <No list name specified> * #Elements: 8

-------------------------------

LR_name (String) = load_long

LR_value (String) = 0.070000

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = mem_free

LR_value (String) = 388.816406M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = virtual_free

LR_value (String) = 779.726562M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = mem_used

LR_value (String) = 103.550781M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = virtual_used

LR_value (String) = 110.636719M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = m_mem_used

LR_value (String) = 101.000000M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

LR_name (String) = m_mem_free

LR_value (String) = 391.000000M

LR_global (Ulong) = 0

LR_static (Ulong) = 0

LR_host (Host) = u1010

-------------------------------

…

After a while you can see a similar output which is more or less self describing. You can see the reosurce name the values, the host where they come from, and some tags (if it is a cluster global value or a static complex).

Suspend and Resume Parallel Jobs in Grid Engine (2013-07-31)

Tightly integrated parallel jobs are under full control of Grid Engine (accounting/resource limitation/etc.). But what happens when a tightly integrated parallel job is suspended?